Project Overview

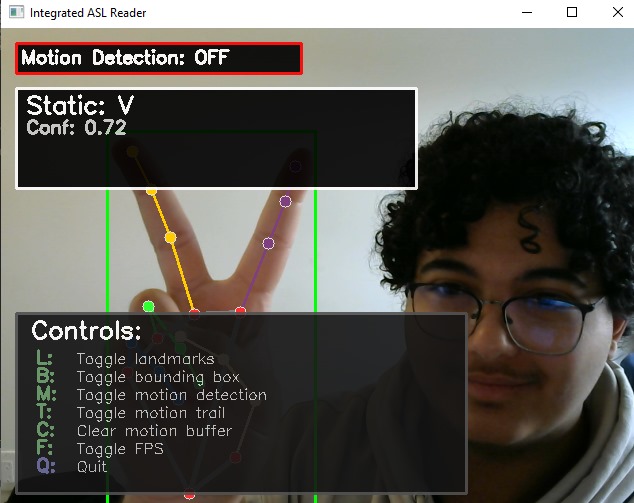

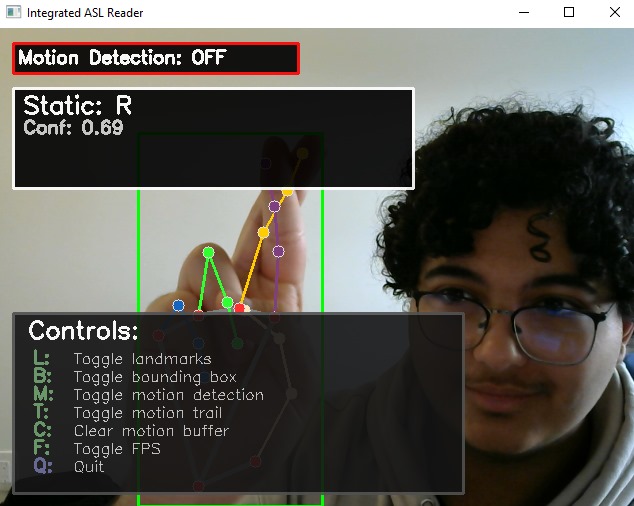

Handspeak is an AI-powered application that uses computer vision and machine learning to recognize American Sign Language (ASL) letters and translate them in real time. The idea came from BCHACKS 2024, where another team's use of computer vision led me to research the field on my own. The project started as a school assignment in early 2025 and has grown into a personal project that recognizes all 26 ASL letters, including the motion-based letters J and Z.

Key Features

- Real-time sign language recognition using a webcam

- Complete support for all 26 ASL letters including motion-based letters (J, Z)

- Advanced hand landmark detection and tracking

- Live translation to text and speech output

- Computer vision-based gesture recognition

- Cross-platform compatibility

Technical Implementation

The system uses OpenCV for hand detection and tracking, combined with machine learning models for gesture classification. The application processes video frames in real time, detects the hand gesture in each frame, and classifies it as the corresponding ASL letter, with special handling for the motion-based letters J and Z.
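Classifying every frame independently tends to make the output flicker between letters, so a real-time pipeline like this usually needs some way to turn per-frame predictions into one stable letter. The sketch below shows one common approach, a majority vote over a sliding window of recent frames. It is an illustration under assumptions, not Handspeak's actual code: the class name `PredictionSmoother`, the window size, and the `min_share` threshold are all hypothetical.

```python
from collections import Counter, deque


class PredictionSmoother:
    """Majority vote over the last `window` per-frame predictions.

    Hypothetical helper: the window size and agreement threshold are
    illustrative values, not settings taken from the project.
    """

    def __init__(self, window=15, min_share=0.6):
        self.history = deque(maxlen=window)
        self.min_share = min_share

    def update(self, letter):
        """Record one per-frame prediction; return a stable letter or None.

        `letter` may be None for frames in which no hand was detected.
        """
        self.history.append(letter)
        if len(self.history) < self.history.maxlen:
            return None  # not enough frames collected yet
        top, count = Counter(self.history).most_common(1)[0]
        if top is not None and count / len(self.history) >= self.min_share:
            return top
        return None  # no letter is dominant enough in the window
```

In use, the video loop would call `update()` once per frame with the classifier's output and only display or speak a letter when the return value is not `None`. A larger window gives steadier output at the cost of a slightly longer delay before a letter appears.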

Key technical components include:

- MediaPipe for precise hand landmark detection

- Custom CNN model trained on ASL letter datasets

- Real-time video processing pipeline

- Motion tracking for dynamic gestures (J, Z)

- Text-to-speech integration

- User interface built with Tkinter
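The motion-tracking component can be illustrated with a small sketch. J is traced with the pinky and Z with the index finger, so one simple way to tell a held letter from a traced one is to measure how far the relevant fingertip travels over the last few frames. The code below assumes fingertip positions are available as normalized `(x, y)` pairs, which is the form MediaPipe Hands landmarks take (index fingertip is landmark 8, pinky tip is landmark 20). The function names and the `threshold` value are hypothetical, and this is not necessarily how Handspeak handles J and Z.

```python
import math

# MediaPipe Hands landmark indices for the two fingertips of interest.
INDEX_FINGER_TIP = 8   # traces the letter Z
PINKY_TIP = 20         # traces the letter J


def path_length(points):
    """Total distance travelled along a sequence of (x, y) points."""
    return sum(
        math.hypot(x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(points, points[1:])
    )


def is_dynamic(points, threshold=0.15):
    """True when a fingertip moved far enough to count as a traced letter.

    `threshold` is in normalized image units and is an illustrative
    value that would need tuning on real recordings.
    """
    return path_length(points) >= threshold
```

A recognizer built this way would buffer the fingertip position for a short window of frames, route the gesture to a dynamic-letter path when `is_dynamic()` returns `True`, and fall back to the static classifier otherwise.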

Project Journey & Results

What started as a hackathon-inspired school project has grown into a personal project that combines computer vision and machine learning. The system recognizes all 26 ASL letters, including the challenging motion-based letters J and Z, and shows the potential of AI in accessibility and inclusive technology.

The application has been tested under various lighting conditions and hand positions and has performed robustly across these environments. The project serves as a foundation for future expansion into more complex ASL vocabulary and sentence-level recognition.