Project Overview

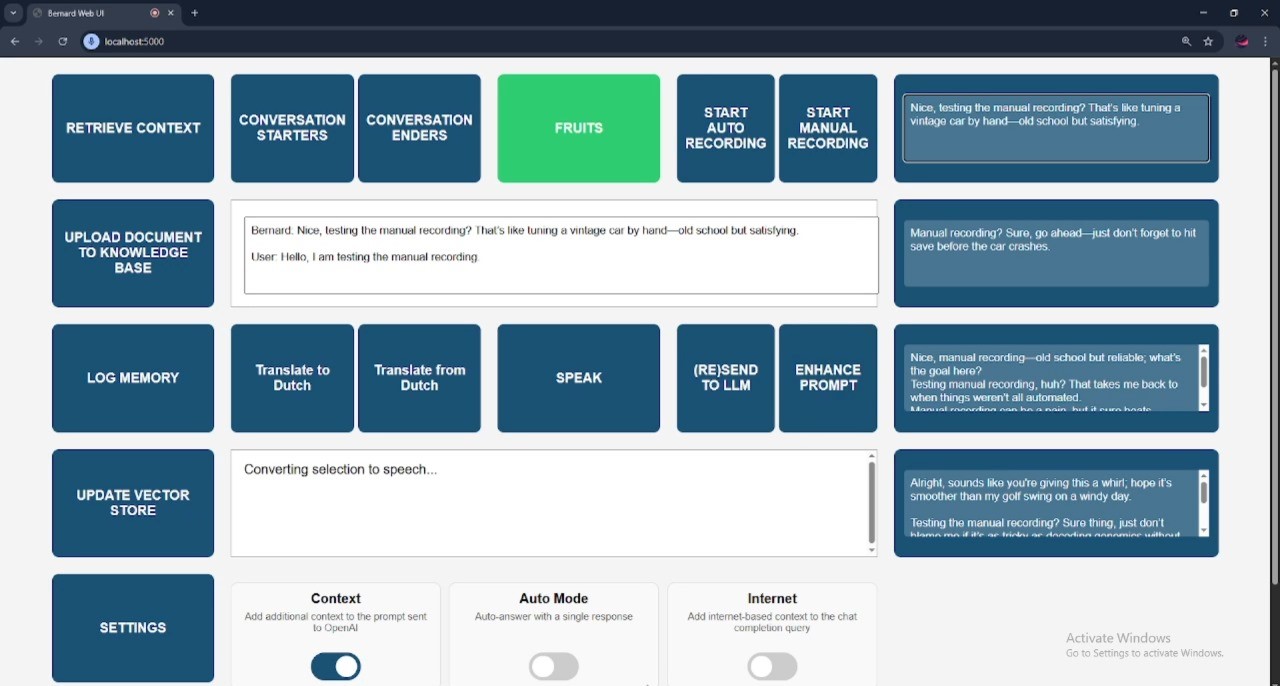

Bernard is an intelligent personal AI assistant developed for a client with ALS to help with communication challenges. The system offers two interaction modes: voice-first conversation with real-time speech processing, and LLM-powered chat with context-aware responses. Built for the Scott-Morgan Foundation, the project shows how AI integration can make a meaningful difference for people with accessibility needs.

Key Features

- 🎤 Real-time Voice Processing: Live audio recording, speech recognition, and text-to-speech synthesis with automatic language detection

- 🧠 Multi-Model AI Integration: Supports both OpenAI GPT-4 and Groq's Llama models with intelligent model selection and switching

- 📚 Intelligent Document Retrieval: RAG (Retrieval-Augmented Generation) system with vector embeddings and semantic search capabilities

- 🌐 Multi-language Support: Automatic language detection and translation capabilities for global accessibility

- ⚡ Parallel Processing: Optimized pipeline for handling multiple concurrent operations and real-time communication

- 💾 Persistent Memory: Chat history and context preservation across sessions with intelligent context management
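One way to keep chat history across sessions while holding the prompt within a model's context limit is to persist every message to disk and send only the newest messages that fit a budget. The sketch below assumes a JSON file as the store and uses a character count as a rough stand-in for token counting; the `ChatMemory` class, its file format, and the default budget are hypothetical, not the project's actual implementation.

```python
import json
import tempfile
from pathlib import Path


class ChatMemory:
    """Hypothetical JSON-backed chat history with a simple context budget."""

    def __init__(self, path: Path, max_chars: int = 4000) -> None:
        self.path = path
        self.max_chars = max_chars  # rough stand-in for a token budget
        # Reload messages from earlier sessions if the file already exists.
        if path.exists():
            self.messages: list[dict] = json.loads(path.read_text(encoding="utf-8"))
        else:
            self.messages = []

    def add(self, role: str, content: str) -> None:
        """Append a message and persist the full history immediately."""
        self.messages.append({"role": role, "content": content})
        self.path.write_text(json.dumps(self.messages), encoding="utf-8")

    def context(self) -> list[dict]:
        """Return the newest messages whose combined length fits the budget."""
        selected: list[dict] = []
        used = 0
        for message in reversed(self.messages):
            size = len(message["content"])
            if used + size > self.max_chars:
                break
            selected.append(message)
            used += size
        return list(reversed(selected))  # restore chronological order


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        store = Path(tmp) / "history.json"
        memory = ChatMemory(store, max_chars=20)
        memory.add("user", "Hello Bernard")              # 13 characters
        memory.add("assistant", "Hi! How can I help?")   # 19 characters
        reloaded = ChatMemory(store, max_chars=20)       # simulates a new session
        print(len(reloaded.messages))  # 2
        print(reloaded.context())      # only the newest message fits 20 characters
```

The full history survives restarts, while `context()` trims what is sent to the model; a production version would count tokens with the model's own tokenizer rather than characters.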

Technical Implementation

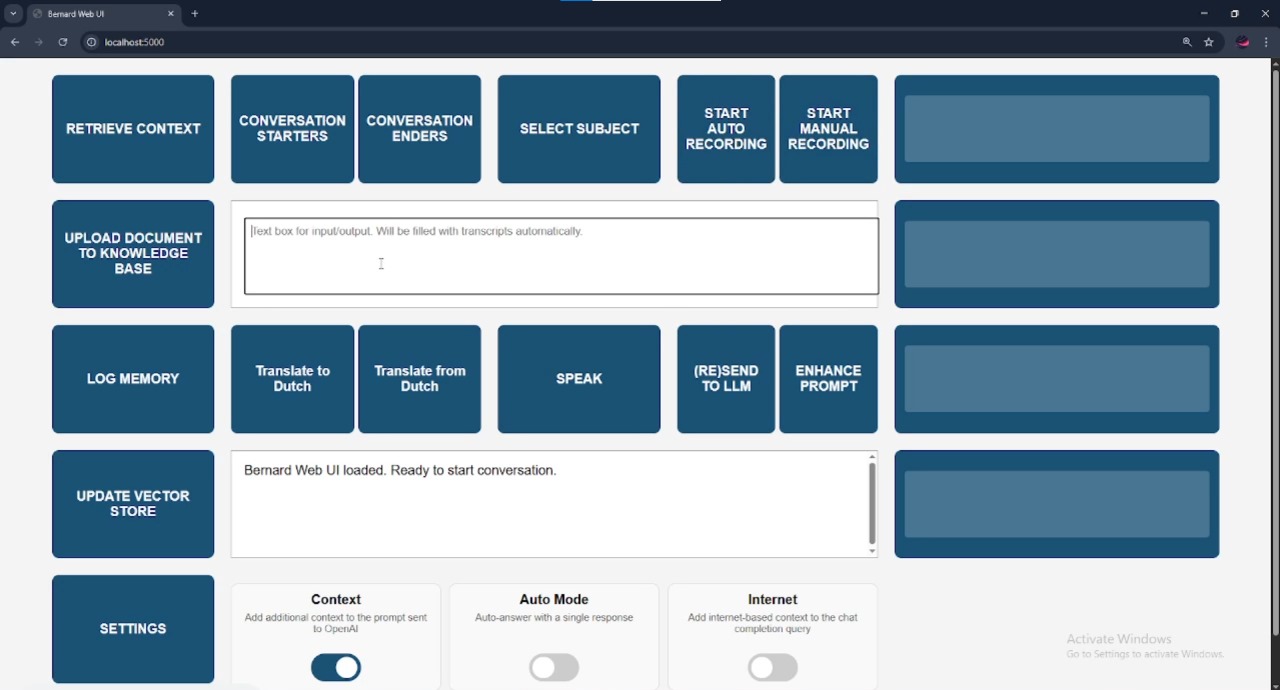

The system is built with a modular architecture that separates concerns into dedicated classes for audio processing, embeddings management, and pipeline orchestration. The backend uses Flask with WebSocket support for real-time bidirectional communication, while the frontend features a modern responsive design.
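The separation of concerns described above could be expressed as small interfaces that a pipeline class composes, which also turns switching between GPT-4 and Llama into a dictionary lookup. In this sketch, `Transcriber`, `Retriever`, `Pipeline`, `EchoTranscriber`, `StaticRetriever`, and the backend keys are hypothetical names; real calls through the OpenAI or Groq clients would replace the stub lambdas.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


class Transcriber(Protocol):
    """Anything that turns recorded audio into text."""

    def transcribe(self, audio: bytes) -> str: ...


class Retriever(Protocol):
    """Anything that returns the k most relevant document snippets."""

    def search(self, query: str, k: int) -> list[str]: ...


# Hypothetical: an LLM backend is modeled as a prompt -> reply function.
LLMBackend = Callable[[str], str]


@dataclass
class Pipeline:
    """Orchestrates speech -> retrieval -> LLM, with swappable backends."""

    transcriber: Transcriber
    retriever: Retriever
    backends: dict[str, LLMBackend]
    active: str = "gpt-4"

    def switch_model(self, name: str) -> None:
        if name not in self.backends:
            raise ValueError(f"unknown model: {name}")
        self.active = name

    def handle_audio(self, audio: bytes) -> str:
        question = self.transcriber.transcribe(audio)
        context = "\n".join(self.retriever.search(question, k=2))
        prompt = f"Context:\n{context}\n\nQuestion: {question}"
        return self.backends[self.active](prompt)


# Stub components standing in for the real audio and embeddings classes.
class EchoTranscriber:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class StaticRetriever:
    def search(self, query: str, k: int) -> list[str]:
        return ["doc A", "doc B", "doc C"][:k]


if __name__ == "__main__":
    pipeline = Pipeline(
        transcriber=EchoTranscriber(),
        retriever=StaticRetriever(),
        backends={
            "gpt-4": lambda prompt: "[gpt-4] " + prompt.splitlines()[-1],
            "llama": lambda prompt: "[llama] " + prompt.splitlines()[-1],
        },
    )
    print(pipeline.handle_audio(b"What time is it?"))
    # [gpt-4] Question: What time is it?
    pipeline.switch_model("llama")
    print(pipeline.handle_audio(b"What time is it?"))
    # [llama] Question: What time is it?
```

Because `Pipeline` depends only on two small interfaces, the stubs can be replaced by real audio and embeddings classes without touching the orchestration code.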

Backend Architecture:

- Flask Application: RESTful API with WebSocket endpoints for real-time communication

- Audio Processing: PyAudio for live recording, SpeechRecognition for transcription, PyDub for audio manipulation

- AI Integration: OpenAI API for GPT-4, Groq API for Llama models, LangChain for orchestration

- Vector Database: FAISS for efficient similarity search and document retrieval

- Document Processing: PDF parsing, text chunking, and embedding generation using sentence transformers
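The chunk-embed-search flow behind the RAG system can be illustrated without external dependencies. The sketch below splits text into overlapping word chunks and returns the top-k chunks by cosine similarity, the same exhaustive search a FAISS flat inner-product index (`IndexFlatIP`) performs on L2-normalized vectors. To stay self-contained it substitutes a toy bag-of-words embedding for a sentence-transformers model, so it matches shared words rather than meaning; `chunk_words`, `embed`, `cosine`, and `top_k` are hypothetical names, and the chunk sizes are arbitrary.

```python
import math
from collections import Counter


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of `size` words; neighbours share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy stand-in for a sentence-transformers embedding: word counts."""
    return Counter(word.strip(".,!?").lower() for word in text.split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, chunks: list[str], k: int = 2) -> list[tuple[float, str]]:
    """Exhaustive similarity search over all chunks, best matches first."""
    q = embed(query)
    scored = [(cosine(q, embed(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    document = (
        "FAISS stores dense vectors for fast similarity search. "
        "Flask serves the web API. PyAudio captures microphone input in real time."
    )
    chunks = chunk_words(document, size=8, overlap=2)
    for score, chunk in top_k("Which library captures microphone input?", chunks, k=1):
        print(f"{score:.2f}  {chunk}")
        # prints: 0.47  API. PyAudio captures microphone input in real time.
```

Swapping in a real embedding model and a FAISS index changes the quality and speed of the search, not its shape: chunk, embed, index, then query for the nearest chunks.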

Advanced Features:

- Async Processing: Concurrent handling of audio recording, transcription, and AI inference

- Vector Search: Semantic document retrieval using sentence transformers and FAISS indexing

- Real-time Communication: WebSocket-based bidirectional communication with error handling

- Error Handling: Robust exception handling and graceful degradation for production use

- Scalable Design: Modular architecture supporting easy extension and maintenance
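Concurrent handling of recording, transcription, and inference can be sketched with `asyncio` queues, so each stage starts on the next item while later stages are still busy with the previous one. The stage functions below are hypothetical stubs that sleep instead of calling PyAudio, a speech recognizer, or an LLM API, and a `None` sentinel marks the end of the stream; this illustrates the pattern rather than the project's actual pipeline.

```python
import asyncio


async def record(out_q: asyncio.Queue, utterances: list[str]) -> None:
    """Stub recorder: emits one 'audio clip' per utterance."""
    for text in utterances:
        await asyncio.sleep(0.01)  # pretend to capture audio
        await out_q.put(text.encode("utf-8"))
    await out_q.put(None)  # end-of-stream sentinel


async def transcribe(in_q: asyncio.Queue, out_q: asyncio.Queue) -> None:
    """Stub transcriber: decodes each clip back into text."""
    while (clip := await in_q.get()) is not None:
        await asyncio.sleep(0.01)  # pretend to run speech recognition
        await out_q.put(clip.decode("utf-8"))
    await out_q.put(None)  # pass the sentinel downstream


async def infer(in_q: asyncio.Queue, replies: list[str]) -> None:
    """Stub LLM stage: produces one reply per transcript."""
    while (text := await in_q.get()) is not None:
        await asyncio.sleep(0.01)  # pretend to call the language model
        replies.append(f"reply to: {text}")


async def run_pipeline(utterances: list[str]) -> list[str]:
    audio_q: asyncio.Queue = asyncio.Queue()
    text_q: asyncio.Queue = asyncio.Queue()
    replies: list[str] = []
    # All three stages run concurrently and hand work off through the queues.
    await asyncio.gather(
        record(audio_q, utterances),
        transcribe(audio_q, text_q),
        infer(text_q, replies),
    )
    return replies


if __name__ == "__main__":
    print(asyncio.run(run_pipeline(["hello", "how are you?"])))
    # ['reply to: hello', 'reply to: how are you?']
```

Each queue decouples two stages, so a slow model call does not block the recorder from capturing the next utterance.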

Impact & Results

Bernard applies current large language models to a practical, real-world need: everyday communication for a person with ALS. The system demonstrates expertise in full-stack AI development, real-time audio processing, and modern web application architecture.

Key achievements include integrating multiple AI service providers (OpenAI and Groq), implementing a production-ready RAG system, and creating an intuitive user interface that makes these AI capabilities accessible to end users. The project also shows that new AI technologies can be adopted while maintaining code quality and system reliability.